Act of God, Act of Malice

A humanitarian’s take on Gaza, AI and the Future We Want

Hello everyone, and thank you again for reading (margin*notes)^squared. I truly appreciate your support over the more than six months since I launched this newsletter. I’m so glad you’re here.

This week I had planned to write about my experience last week in Kuala Lumpur.

Along with teammates, I had the honor of training parliamentarians and their staff from around the world on AI’s harms and risks, its awesome potential, and the urgent need to build effective governance structures. Having their attention for half a day, and then closing the conference with a plenary discussion about reclaiming our policy future from silicon messiahs, was a profound privilege.

But, alas, that’s not what I’m writing about today — despite having every intention to do so.

Yesterday morning I changed my mind.

I scrolled through Instagram and came across some clips that made me watch and rewatch, and think really hard. It was one of those moments when my values and principles come screaming to the fore and remind me that they’re there. And not to ignore them.

The first was an interview (here and here) with Tom Fletcher, the UN humanitarian chief, reflecting on his experience in Gaza. He described how the place smelled like death. Felt like death. “Death on this scale has a sound and a smell which is impossible to describe.” It is a “smell that never leaves you,” Tom said. More so than in any other disaster area he’d worked in, and he’s been to the most violent places on earth.

The second clip featured Hillary Clinton, someone I’ve long admired, speaking at the Doha Forum. She obfuscated and couldn’t bring herself to acknowledge the legitimacy of people’s shifting views toward Israel in light of the tragedy that is Gaza, let alone acknowledge America’s role in it, let alone acknowledge what it is. She simply lumped it in with other conflicts: “conflict everywhere is horrific,” she said. She doubled down on what she had said at the Israel Hayom Summit a week earlier, where she claimed pro-Palestinian youth had been propagandised and misinformed by social media. At first, I felt disappointed. Then I felt very sad.

I got sad because, like Tom, I know what death smells like, too. And tastes like.

And I don’t think Hillary does.

When death has so enveloped you that you forget what even life smells like — what it tastes like — it changes something in you.

In 2004, I was the first USAID humanitarian on the ground in Banda Aceh, Indonesia, after the Boxing Day tsunami. Some 200,000 people died there. Bodies stacked like cordwood. Buried in debris. Hanging in trees. Infrastructure gone; erased from the face of the earth.

I haven’t been to Gaza.

But I’ve been to death.

And that’s not something you ever forget.

You might wonder why I’m bringing this up in a newsletter that’s usually about decolonising tech policy and centering the margins in policy discourse. Why Gaza — why now — why here?

More Than Meets the Eye

You must understand that Gaza isn’t just about bombs, tanks, and artillery. Whatever your view of this tragedy, it is beyond dispute that Gaza is a Petri dish of cutting-edge frontier technologies: surveillance systems, guided missiles, biometric databases, AI-enhanced targeting, LLM grooming, bulk eavesdropping and voice analysis. They are being deployed in the service of control and killing, the erasure of a people and their culture from the face of the earth.

Fueled by big tech companies and the smartest, sharpest technologists and engineers the world has ever seen. Microsoft provides cloud, compute and AI services. Social media companies remove and shadowban content too critical of Israeli behaviour.

Gaza is also about former colonisers empowering settler colonialism, allowing it to masquerade as self-defense. (The US has subsidised Israel with more than $25 billion in the past two years alone. See the Council on Foreign Relations for the latest datasets.) Western leaders, Hillary among many others, rightly condemn Russia’s invasion of Ukraine but cannot fathom lifting a finger against Israel.

As I write this from Southeast Asia, Israeli tourists pour in for holidays as if nothing unusual is happening back home. As if everything is normal. IDF soldiers come here for R&R to escape their own reality. Maybe even to deal with their own trauma, through drinking parties, drugs, and much else.

Palestinians can’t leave. They can’t come to enjoy the beautiful beaches of Southeast Asia. They can’t even enjoy their own beaches on the Mediterranean coast for that matter.

That’s what Gaza is.

And yes, it is powered by artificial intelligence. Not just in the missiles, drones, or surveillance, but in the advanced software systems that identify, sort, track, and then eliminate people and places. Journalists. NGOs. NGO workers. Humanitarians. Libraries. Universities. Hospitals. Mosques. Churches. Innocent children. Tens of thousands of innocent children.

These frontier technologies will soon be marketed as ‘battle-tested,’ sold to authoritarian regimes (and Western countries, too), and normalised as AI warfare under the logic of national security, creating even more wealth for the tech companies.

It shouldn’t be this way.

For all that AI can do to help us flourish — to improve health, strengthen communities, and amplify creativity — it should never be used to erase people. Their land or stories or memories.

You know, the world has come together before: To ban landmines. To limit nuclear weapons. To regulate cloning. To eradicate smallpox.

In Aceh we came together using crude technologies like GPS, SMS, short-wave radios, mobile cell towers, and lots of human networking. Human intelligence drove the response to save lives, livelihoods and communities.

Twenty years later, we can come together again to draw red lines around how the most powerful technologies we have are used. The global majority has an important role to play here, even a leading one.

Check out Clause 0 from the Ethical AI Alliance and AI Red Lines.

Clause 0 is what should have been written — the foundational ethical safeguard that precedes all others. It is the limit that must not be crossed: that the use of AI, data, and cloud infrastructure must never enable or escalate unlawful violence, occupation, surveillance, or forced displacement. — Clause 0

It is in our vital common interest to prevent AI from inflicting serious and potentially irreversible damages to humanity, and we should act accordingly. — AI Red Lines

Frontier Technologies Need Frontier Ethics

So let’s be absolutely clear: frontier technologies need frontier ethics.

The world needs national, regional and international governance frameworks rooted in dignity, justice, and human flourishing — not domination.

Centered on people, not profit.

Focused on creating better lives — not extinguishing them.

The world’s greatest minds should never be deployed to build machines that further death or enable ethnic cleansing or mass surveillance. Should never be used in the pursuit of malice.

J. Robert Oppenheimer, after witnessing the first atomic bomb test, famously recalled a line from the Bhagavad Gita:

“Now I am become Death, the destroyer of worlds.”

I’m no Oppenheimer. But I’ve smelled death. Tasted it. It sits there deep in your throat. I felt it.

And I carry that forward with me, working to ensure our most powerful technologies serve life — not death.

In Kuala Lumpur, those parliamentarians told me how powerless they feel against Big Tech, against a system and infrastructure that feels immovable.

My message to them — and to you — is this:

You are more powerful than you think.

Bending the Arc of Technology

You can cut through the rhetoric, the propaganda, the manipulation. Through your advocacy you can set policy, draw red lines, and hold them. Demand meaningful answers to tough questions. As policymakers and advocates who influence policy, you can bend the arc of technology toward justice and human flourishing.

My years in Aceh — from civil war, to humanitarian response, to longer-term peacebuilding — fundamentally changed my life and my outlook. It shaped how I see hardship, community, and responsibility.

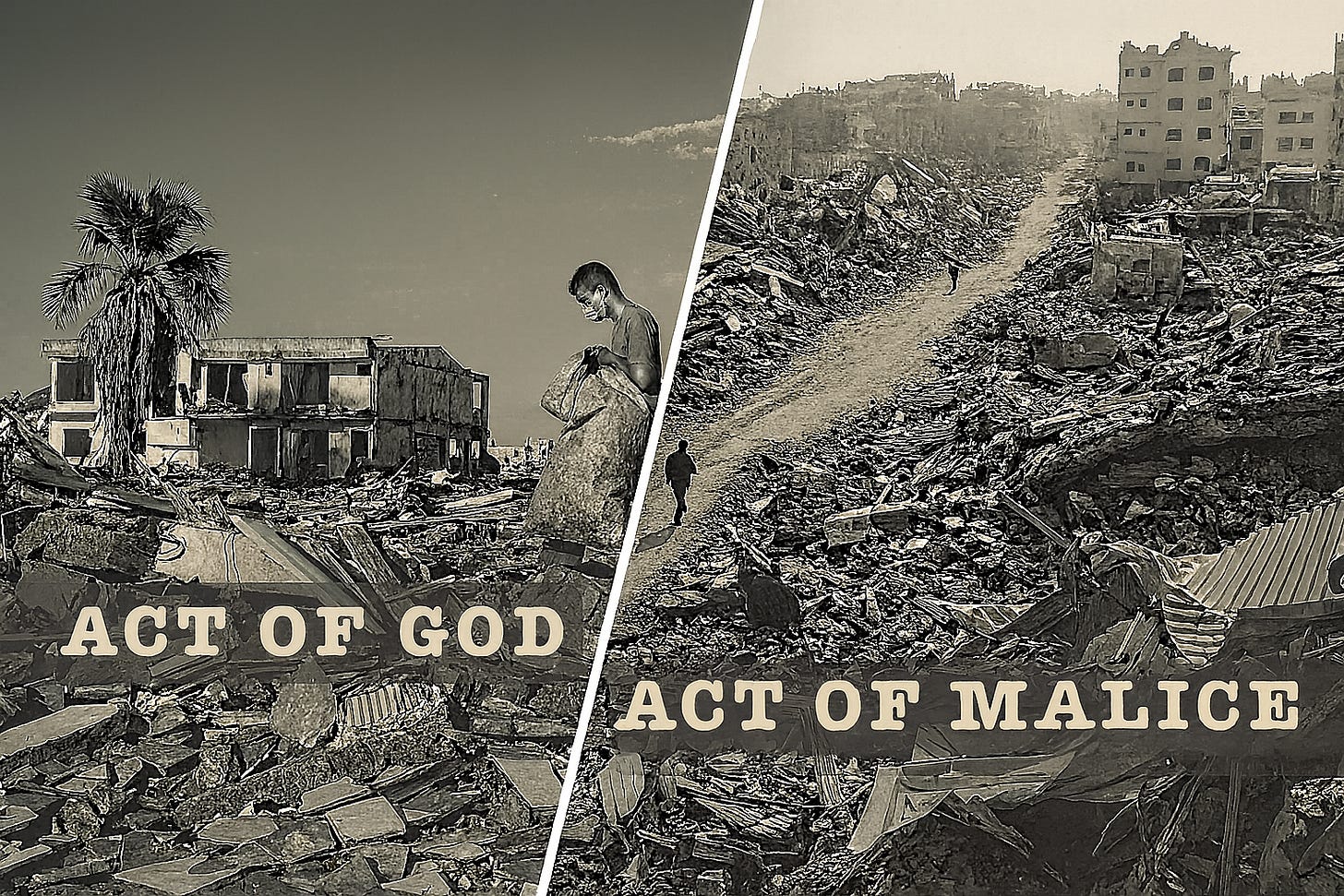

Aceh was an act of God — a natural disaster.

Fueled by a trembling earth.

Fueled by enormous waves.

Fueled by the awesome and destructive power of nature.

But Gaza is an act of malice.

Fueled by technology designed by our brightest minds.

Fueled by hate in pursuit of endless vengeance.

Fueled by settler colonialism that takes, and takes, and takes.

Looking back:

Aceh taught me to serve human flourishing.

Looking forward:

Gaza teaches me to fight the frontier risks that harm and weaken our humanity.

I can accept an act of God and move forward. But an act of malice requires a plan to respond.

The work ahead is pretty clear. We must ensure that the wonders of AI and frontier technologies are directed toward dignity, equity, and human flourishing — for all people, all citizens, everywhere.

For the policymakers out there: you can shape and bend the arc of technological “progress.”

Now you are become Life. The shaper of worlds.

Another thing I’ve learned in Aceh, having returned many times over the past 20 years: life finds a way. When we come together in common purpose, life always finds a way.