Meta Is Reading Your AI Chats — and You Can’t Opt Out

Unbounded: 2 Minutes Edgewise delivers sharp, fast takes on current events, fresh revelations, and just cool things. Provocative or hopeful or fiery — it will always be brief, always grounded, and always unbounded.

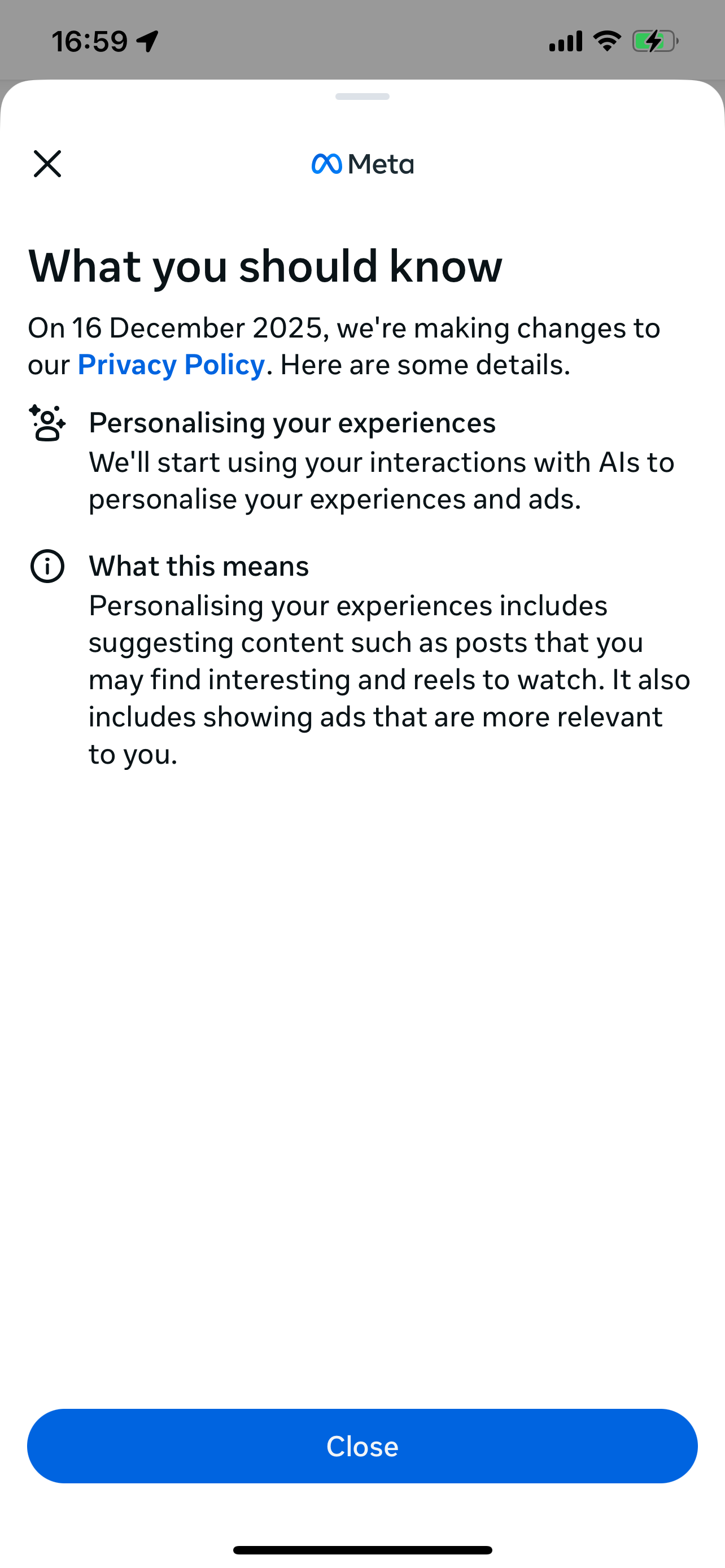

Yesterday I received a “privacy update” notification in my Facebook app that Meta will start mining the conversations I have with its AI tools to “personalise your experience.”

But what does this really mean? Hint: it’s not about personalisation.

It means Meta now acknowledges that it can (and will) read and analyse EVERYTHING we type (or voice) into its AI tools — on Facebook, Instagram, and even those Meta Ray-Ban smart glasses. They assure us that they will use that information only to target us with ads and push more addictive content that may better launch us into a death scroll.

You might be chatting innocently about your dream trip to Indonesia or exploring a possible new interest in yoga. But you might also be exploring your mental well-being, asking about sexual health, or wondering whether you’re in a toxic relationship. You could be questioning your religion, sharing concerns over the increasing fascism of your government, brainstorming campaigns against genocide, or trying to understand gender identity or addiction.

Without grasping the safety and privacy implications of many of the AI tools out there, including those from Meta, people are sharing more of their personal, sensitive, exploratory, and deeply private feelings and emotions with artificial intelligence.

Meta has said it won’t use certain categories, such as religion, health, sexual orientation, or political views, to serve ads and discover content. But we’ve heard these kinds of promises from them before. Where do they draw the line? Will humans be in the loop? How can we trust them when they can’t even weed out scammy ads or correctly identify hate speech and political content? How many “technical glitches” will we have to put up with?

But here’s the most frustrating bit: unless you live in one of a few places with strict privacy laws, like the EU, UK, or South Korea, you have no ability to opt out.

That’s right. You cannot tell Meta to stop listening in on your interactions with their AI tools. What’s more, THEY will be the ones to decide if the conversation was too personal or sensitive or political to mine for data insights. They decide!

Meta calls this “personalising your experience.” I think it’s more like colonising our experiences.

What you need to know is that this kind of language is corporate spin.

It’s exactly like how corporations refer to “cloud computing” to make people forget about the water, land, and energy costs of data centers. Similarly, “personalisation” makes us feel special and considered, and helps us forget that the deepest recesses of our private lives are being surveilled, sorted, and sold — without real consent. And now without any ability to opt out.

Can we just be clear that this is NOT okay? And it’s not only about ads and recommendation algorithms. This is also about trust, and more importantly, power. And the implications are significant.

If Meta can harvest our AI conversations for data and insights (for free) to improve their ability to sell more ads, sustain more eyeballs, and therefore make more money, what’s to stop them from being coerced into handing it over to a government, say, on national security grounds or as a favour for better market access?

And now that authoritarian actors everywhere KNOW Meta can harvest the conversations, retain data, extract insights, and take action — what happens when they demand this info from Meta, too? That same data can be used to suppress dissent, influence elections, or assist in policing and surveillance. Imagine what ICE, border agencies, and other authoritarian actors could do.

Remember, Meta doesn’t have a great track record here. They paid a $5 billion fine to the FTC for privacy violations — after already violating a 2012 consent decree. They allowed Cambridge Analytica to siphon personal data from millions of users to influence elections. They’ve repeatedly shown that scale and profit come before safety, accountability, or the public interest.

And yet, they want us to “trust” them to protect our sensitive AI interactions?

If they truly cared about users’ privacy, they’d adopt the highest global standards by default, not just where the law compels them to. This isn’t a privacy issue. It’s a profit issue. And a power issue.

AI is being integrated into our everyday digital experiences at warp speed. And companies like Meta are laying the groundwork for a future where everything we say — even in vulnerable, private moments — becomes data to mine.

Our lives are becoming extractable. Our lives are being colonised.

Don’t believe that this is just about the ads.

It’s about surveillance. And it’s time we called it what it is.
